Long-time readers of this blog know I’ve been obsessed with “signs of life” from communities, which I call “community indicators.” I haven’t posted any recently, but something spurred me yesterday…

This past week I was very grateful to be a supporter of Dreamfish’s online retreat for their inaugural group of Dreamfish Fellows. The fellows will be taking leadership and stewardship roles in the Dreamfish network and communities over the next six months. Because they are the first group, the retreat explored not only a new group but also the roles its members will play. It was all online, because cost and distance made a face-to-face meeting a less “sustainable” option.

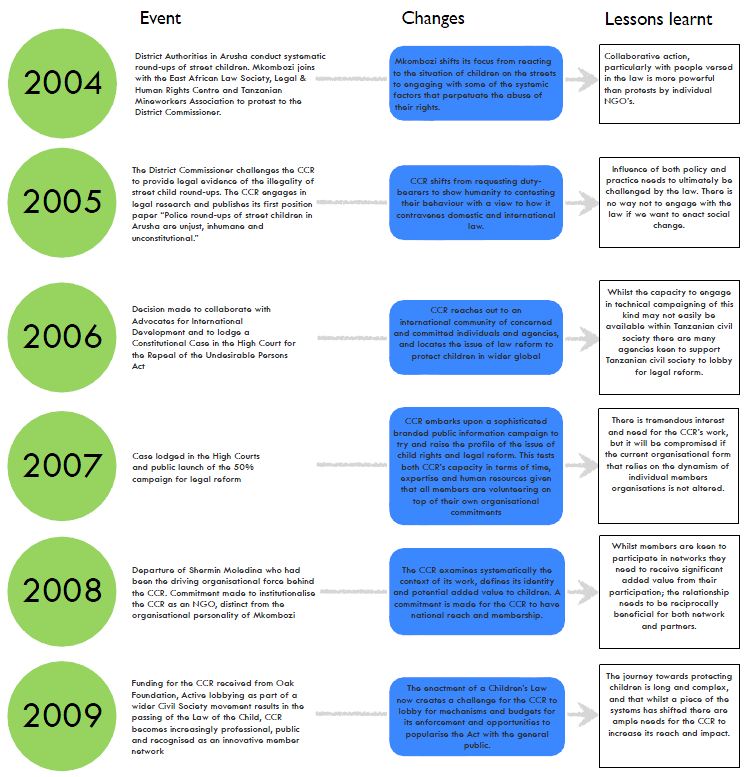

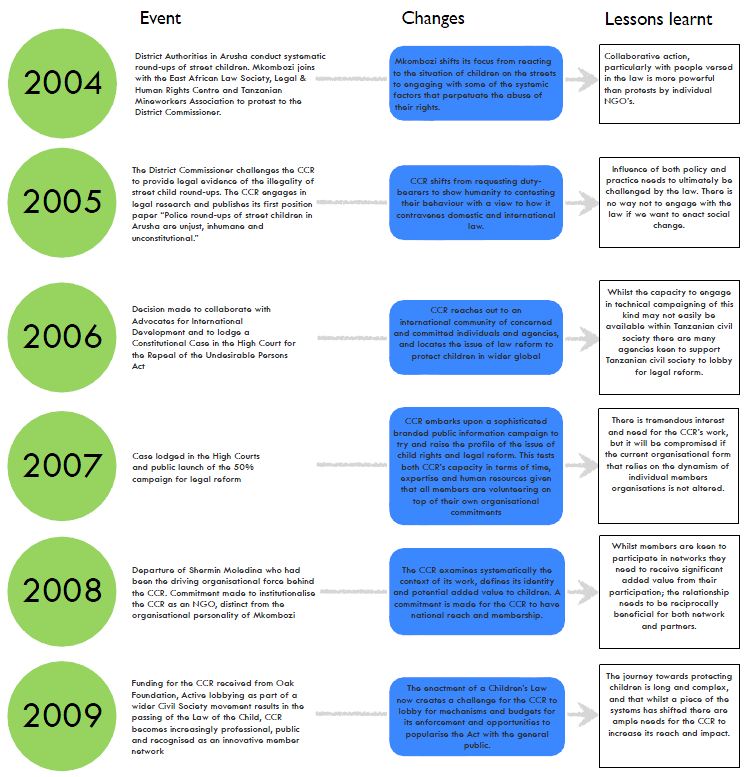

One of the Fellows, Kate McAlpine, shared some of her work with the Caucus for Children’s Rights in Tanzania.

She shared a draft paper that I have yet to read, but this graphic really “rang my bells.” You’ll have to click on it to read it, and I’ve included the PDF for ease.

This is certainly a community indicator in my eyes, capturing (or “reifying”; see the definition below!) the learning of a community of practice over time. In this case, the indicator is learning over time, along with a way to VISUALIZE and SHARE that learning. That is the bit that really stands out for me.

Attribution: Kate McAlpine (2009) Caucus for Children’s Rights, Tanzania.

CCR Graphics_15Dec09

Any community indicators showing up in your life? Should we start thinking about network indicators?

Definition time… Reification, from Etienne Wenger (Wenger, E. (1998). Communities of Practice: Learning, Meaning and Identity. Cambridge: Cambridge University Press), as gleaned from a paper by Hildreth (2002):

> …to refer to the process of giving form to our experience by producing objects that congeal this experience into ‘thingness’ … With the term reification I mean to cover a wide range of processes that include making, designing, representing, naming, encoding and describing as well as perceiving, interpreting, using, reusing, decoding and recasting. (Wenger, 1998: 58-59)